
Although evaluating the outputs of Large Language Models (LLMs) is essential for anyone looking to ship robust LLM applications, LLM evaluation remains a challenging task for many. Whether you are refining a model’s accuracy through fine-tuning or enhancing a Retrieval-Augmented Generation (RAG) system’s contextual relevancy, understanding how to develop and decide on the appropriate set of LLM evaluation metrics for your use case is imperative to building a bulletproof LLM evaluation pipeline.

This article will teach you everything you need to know about LLM evaluation metrics, with code samples included. We’ll dive into:

- What LLM evaluation metrics are, common pitfalls, and what makes great LLM evaluation metrics great.

- All the different methods of scoring LLM evaluation metrics.

- How to implement and decide on the appropriate set of LLM evaluation metrics to use.

Are you ready for the long list? Let’s begin.

What are LLM Evaluation Metrics?

LLM evaluation metrics are metrics that score an LLM’s output based on criteria you care about. For example, if your LLM application is designed to summarize pages of news articles, you’ll need an LLM evaluation metric that scores based on:

- Whether the summary contains enough information from the original text.

- Whether the summary contains any contradictions or hallucinations from the original text.

Moreover, if your LLM application has a RAG-based architecture, you’ll probably need to score for the quality of the retrieval context as well. The point is, an LLM evaluation metric assesses an LLM application based on the tasks it was designed to do. (Note that an LLM application can simply be the LLM itself!)

Great evaluation metrics are:

- Quantitative. Metrics should always compute a score when evaluating the task at hand. This approach enables you to set a minimum passing threshold to determine if your LLM application is “good enough” and allows you to monitor how these scores change over time as you iterate and improve your implementation.

- Reliable. As unpredictable as LLM outputs can be, the last thing you want is for an LLM evaluation metric to be equally flaky. So, although metrics evaluated using LLMs (aka. LLM-Evals), such as G-Eval, are more accurate than traditional scoring methods, they are often inconsistent, which is where most LLM-Evals fall short.

- Accurate. Reliable scores are meaningless if they don’t truly represent the performance of your LLM application. In fact, the secret to making a good LLM evaluation metric great is to make it align with human expectations as much as possible.

So the question becomes, how can LLM evaluation metrics compute reliable and accurate scores?

Different Ways to Compute Metric Scores

In one of my previous articles, I talked about how LLM outputs are notoriously difficult to evaluate. Fortunately, there are numerous established methods available for calculating metric scores — some utilize neural networks, including embedding models and LLMs, while others are based entirely on statistical analysis.

We’ll go through each method and talk about the best approach by the end of this section, so read on to find out!

Statistical Scorers

Before we begin, I want to start by saying that statistical scoring methods are, in my opinion, non-essential to learn about, so feel free to skip straight to the “G-Eval” section if you’re in a rush. This is because statistical methods perform poorly whenever reasoning is required, making them too inaccurate as scorers for most LLM evaluation criteria.

To quickly go through them:

- The BLEU (BiLingual Evaluation Understudy) scorer evaluates the output of your LLM application against annotated ground truths (or, expected outputs). It calculates the precision for each matching n-gram (n consecutive words) between an LLM output and expected output to calculate their geometric mean and applies a brevity penalty if needed.

- The ROUGE (Recall-Oriented Understudy for Gisting Evaluation) scorer is primarily used for evaluating text summaries from NLP models, and calculates recall by comparing the overlap of n-grams between LLM outputs and expected outputs. It determines the proportion (0–1) of n-grams in the reference that are present in the LLM output.

- The METEOR (Metric for Evaluation of Translation with Explicit Ordering) scorer is more comprehensive since it calculates scores by assessing both precision (n-gram matches) and recall (n-gram overlaps), adjusted for word order differences between LLM outputs and expected outputs. It also leverages external linguistic databases like WordNet to account for synonyms. The final score is the harmonic mean of precision and recall, with a penalty for ordering discrepancies.

- The Levenshtein distance (or edit distance, which you probably recognize as a LeetCode hard DP problem) scorer calculates the minimum number of single-character edits (insertions, deletions, or substitutions) required to change one word or text string into another, which can be useful for evaluating spelling corrections, or other tasks where the precise alignment of characters is critical.

Since purely statistical scorers hardly take any semantics into account and have extremely limited reasoning capabilities, they are not accurate enough for evaluating LLM outputs that are often long and complex.
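To make the scorers above concrete, here is a minimal sketch of their building blocks: clipped n-gram precision (the ingredient BLEU averages), n-gram recall (the idea behind ROUGE-N), and Levenshtein distance. The function names and whitespace tokenization are my own simplifications, and the brevity penalty, geometric mean, and synonym matching of the full metrics are deliberately left out.

```python
from collections import Counter


def ngrams(tokens, n):
    """Return the list of n-grams (as tuples) in a token list."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def ngram_overlap(candidate, reference, n=1):
    """Clipped overlap count plus the n-gram totals of both texts."""
    cand = Counter(ngrams(candidate.split(), n))
    ref = Counter(ngrams(reference.split(), n))
    return sum((cand & ref).values()), sum(cand.values()), sum(ref.values())


def ngram_precision(candidate, reference, n=1):
    """BLEU-style building block: share of candidate n-grams found in the reference."""
    overlap, cand_total, _ = ngram_overlap(candidate, reference, n)
    return overlap / cand_total if cand_total else 0.0


def ngram_recall(candidate, reference, n=1):
    """ROUGE-N-style recall: share of reference n-grams found in the candidate."""
    overlap, _, ref_total = ngram_overlap(candidate, reference, n)
    return overlap / ref_total if ref_total else 0.0


def levenshtein(a, b):
    """Minimum number of single-character insertions, deletions, or substitutions."""
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            cost = 0 if char_a == char_b else 1
            current.append(min(previous[j] + 1,          # deletion
                               current[j - 1] + 1,       # insertion
                               previous[j - 1] + cost))  # substitution
        previous = current
    return previous[-1]


expected = "the cat sat on the mat"
output = "the cat is on the mat"
print(ngram_precision(output, expected, n=1))  # unigram precision
print(ngram_recall(output, expected, n=2))     # bigram recall
print(levenshtein("kitten", "sitting"))        # prints 3
```

Notice that swapping “sat” for “is” barely moves these numbers even though the meaning changed, which is exactly the weakness described above.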

Model-Based Scorers

Scorers that are purely statistical are reliable but inaccurate, as they struggle to take semantics into account. In this section, it is more of the opposite — scorers that purely rely on NLP models are comparably more accurate, but are also more unreliable due to their probabilistic nature.

This shouldn’t be a surprise, but scorers that are not LLM-based perform worse than LLM-Evals, for the same reason stated for statistical scorers. Non-LLM scorers include:

- The NLI scorer, which uses Natural Language Inference models (which is a type of NLP classification model) to classify whether an LLM output is logically consistent (entailment), contradictory, or unrelated (neutral) with respect to a given reference text. The score typically ranges between entailment (with a value of 1) and contradiction (with a value of 0), providing a measure of logical coherence.

- The BLEURT (Bilingual Evaluation Understudy with Representations from Transformers) scorer, which uses pre-trained models like BERT to score LLM outputs on some expected outputs.

Apart from inconsistent scores, these approaches have several other shortcomings. For example, NLI scorers can struggle with accuracy when processing long texts, while BLEURT is limited by the quality and representativeness of its training data.

So here we go, let’s talk about LLM-Evals instead.

G-Eval

G-Eval is a recently developed framework from a paper titled “NLG Evaluation using GPT-4 with Better Human Alignment” that uses LLMs to evaluate LLM outputs (aka. LLM-Evals).

As introduced in one of my previous articles, G-Eval first generates a series of evaluation steps using chain of thoughts (CoTs) before using the generated steps to determine the final score via a form-filling paradigm (this is just a fancy way of saying G-Eval requires several pieces of information to work). For example, evaluating LLM output coherence using G-Eval involves constructing a prompt that contains the criteria and text to be evaluated to generate evaluation steps, before using an LLM to output a score from 1 to 5 based on these steps.

Let’s run through the G-Eval algorithm using this example. First, to generate evaluation steps:

- Introduce an evaluation task to the LLM of your choice (eg. rate this output from 1–5 based on coherence)

- Give a definition for your criteria (eg. “Coherence — the collective quality of all sentences in the actual output”).

(Note that in the original G-Eval paper, the authors only used GPT-3.5 and GPT-4 for experiments, and having personally played around with different LLMs for G-Eval, I would highly recommend you stick with these models.)

After generating a series of evaluation steps:

- Create a prompt by concatenating the evaluation steps with all the arguments listed in your evaluation steps (eg., if you’re looking to evaluate coherence for an LLM output, the LLM output would be a required argument).

- At the end of the prompt, ask it to generate a score between 1–5, where 5 is better than 1.

- (Optional) Take the probabilities of the output tokens from the LLM to normalize the score and take their weighted summation as the final result.

The third step is optional because, to get the probabilities of the output tokens, you need access to the model’s token-level output probabilities (log-probs), which is not something guaranteed to be available for all model interfaces. This step was nevertheless introduced in the paper because it offers more fine-grained scores and minimizes bias in LLM scoring (as stated in the paper, 3 is known to have a higher token probability for a 1–5 scale).

The results from the paper show that G-Eval outperforms all the traditional, non-LLM evals that were mentioned earlier in this article.

G-Eval is great because as an LLM-Eval it is able to take the full semantics of LLM outputs into account, making it much more accurate. And this makes a lot of sense: think about it, how can non-LLM Evals, which use scorers that are far less capable than LLMs, possibly understand the full scope of text generated by LLMs?
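The optional normalization step boils down to a probability-weighted sum over the candidate scores. Here is a minimal sketch, assuming you have already read the probability of each score token (1 to 5) from your model interface; the `weighted_score` helper and the example probabilities are made up for illustration.

```python
def weighted_score(score_probabilities):
    """Probability-weighted sum of candidate scores.

    `score_probabilities` maps each candidate score (e.g. 1..5) to the
    probability the LLM assigned to that score token. The sum is divided
    by the total mass so it still works when the probabilities do not
    add up to exactly 1 (for example, when only the top tokens are returned).
    """
    total = sum(score_probabilities.values())
    if total == 0:
        raise ValueError("no probability mass on any score token")
    return sum(score * p for score, p in score_probabilities.items()) / total


# Hypothetical token probabilities for the scores 1-5 (illustrative values only)
probs = {1: 0.05, 2: 0.10, 3: 0.40, 4: 0.30, 5: 0.15}
print(weighted_score(probs))
```

Instead of the single most likely token (3 in this example), you get a continuous score that reflects how much probability the model also placed on 4 and 5.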

Although G-Eval correlates much more with human judgment when compared to its counterparts, it can still be unreliable, as asking an LLM to come up with a score is indisputably arbitrary.

That being said, given how flexible G-Eval’s evaluation criteria can be, I’ve personally implemented G-Eval as a metric for DeepEval, an open-source LLM evaluation framework I’ve been working on (which includes the normalization technique from the original paper).

# Install
pip install deepeval

# Set OpenAI API key as env variable
export OPENAI_API_KEY="..."

from deepeval.test_case import LLMTestCase, LLMTestCaseParams
from deepeval.metrics import GEval

test_case = LLMTestCase(input="input to your LLM", actual_output="your LLM output")
coherence_metric = GEval(
    name="Coherence",
    criteria="Coherence - the collective quality of all sentences in the actual output",
    evaluation_params=[LLMTestCaseParams.ACTUAL_OUTPUT],
)

coherence_metric.measure(test_case)
print(coherence_metric.score)
print(coherence_metric.reason)

Another major advantage of using an LLM-Eval is that LLMs are able to generate a reason for their evaluation score.

Prometheus

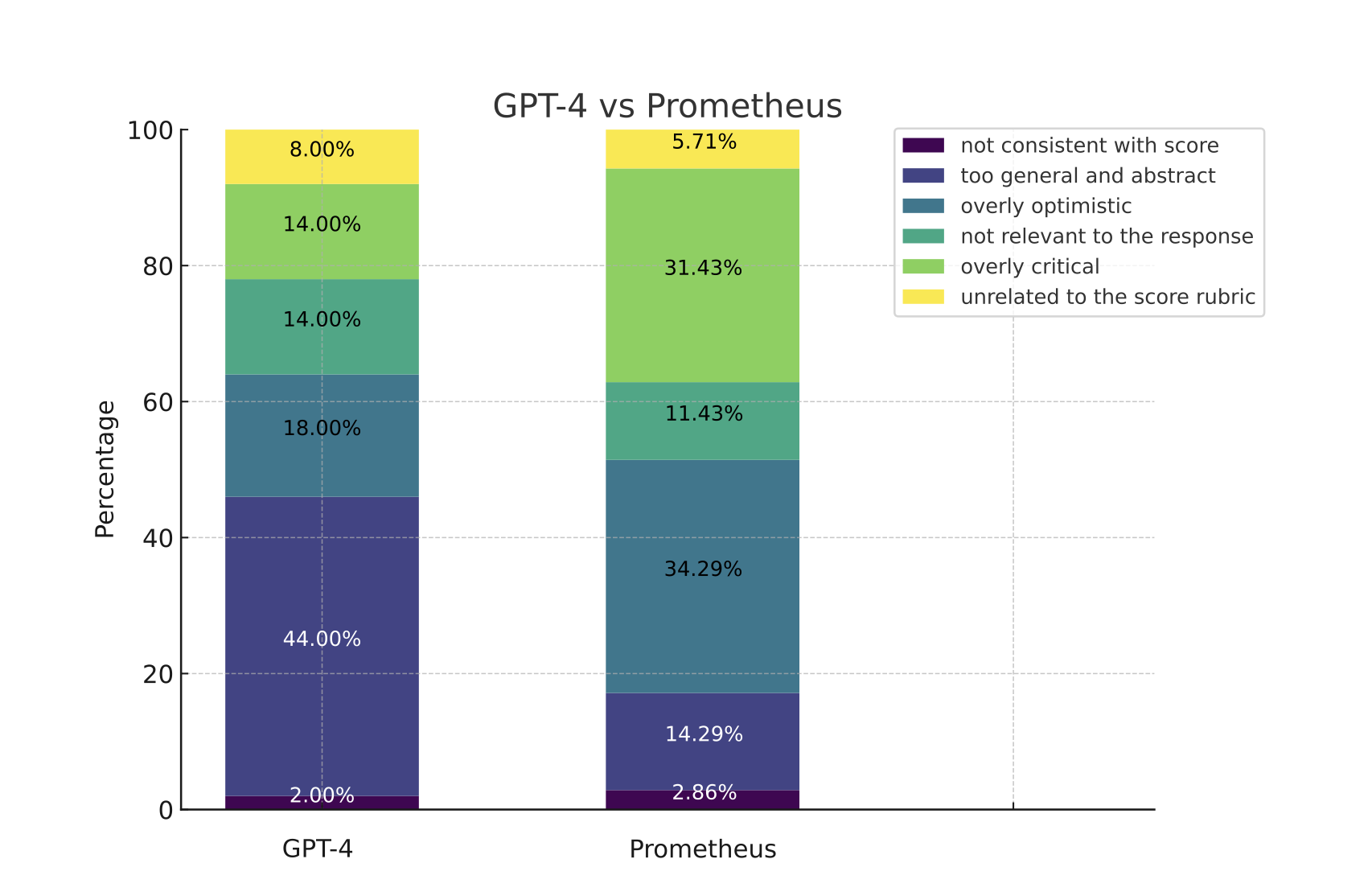

Prometheus is a fully open-source LLM that is comparable to GPT-4’s evaluation capabilities when the appropriate reference materials (reference answer, score rubric) are provided. It is also use case agnostic, similar to G-Eval. Prometheus is a language model using Llama-2-Chat as a base model and fine-tuned on 100K feedback (generated by GPT-4) within the Feedback Collection.

Here are the brief results from the Prometheus research paper.

Prometheus follows the same principles as G-Eval. However, there are several differences:

- While G-Eval is a framework that uses GPT-3.5/4, Prometheus is an LLM fine-tuned for evaluation.

- While G-Eval generates the score rubric/evaluation steps via CoTs, the score rubric for Prometheus is provided in the prompt instead.

- Prometheus requires reference/example evaluation results.

Although I personally haven’t tried it, Prometheus is available on Hugging Face. The reason I haven’t tried implementing it is that Prometheus was designed to make evaluation open-source instead of depending on proprietary models such as OpenAI’s GPTs. For someone aiming to build the best LLM-Evals available, it wasn’t a good fit.

Combining Statistical and Model-Based Scorers

By now, we’ve seen how statistical methods are reliable but inaccurate, and how non-LLM model-based approaches are less reliable but more accurate. Similar to the previous section, there are non-LLM scorers such as:

- The BERTScore scorer, which relies on pre-trained language models like BERT and computes the cosine similarity between the contextual embeddings of words in the reference and the generated texts. These similarities are then aggregated to produce a final score. A higher BERTScore indicates a greater degree of semantic overlap between the LLM output and the reference text.

- The MoverScore scorer, which first uses embedding models, specifically pre-trained language models like BERT to obtain deeply contextualized word embeddings for both the reference text and the generated text before using something called the Earth Mover’s Distance (EMD) to compute the minimal cost that must be paid to transform the distribution of words in an LLM output to the distribution of words in the reference text.

Both the BERTScore and MoverScore scorers are vulnerable to problems with contextual awareness and bias due to their reliance on contextual embeddings from pre-trained models like BERT. But what about LLM-Evals?
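To see what “aggregating cosine similarities” means in practice, here is a minimal sketch of the greedy matching BERTScore uses: each token is paired with its most similar token in the other text, and the best similarities are averaged into precision, recall, and F1. The tiny 2-D vectors are hypothetical stand-ins for real BERT embeddings, and the optional idf weighting is omitted.

```python
import math


def cosine(u, v):
    """Cosine similarity between two vectors given as lists of floats."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def greedy_match_f1(candidate_vecs, reference_vecs):
    """BERTScore-style precision, recall, and F1 via greedy cosine matching."""
    # Recall: every reference token is matched to its closest candidate token.
    recall = sum(
        max(cosine(r, c) for c in candidate_vecs) for r in reference_vecs
    ) / len(reference_vecs)
    # Precision: every candidate token is matched to its closest reference token.
    precision = sum(
        max(cosine(c, r) for r in reference_vecs) for c in candidate_vecs
    ) / len(candidate_vecs)
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Toy "embeddings" (assumed values): the candidate repeats one reference token
# and misses the other entirely.
reference = [[1.0, 0.0], [0.0, 1.0]]
candidate = [[1.0, 0.0], [1.0, 0.0]]
precision, recall, f1 = greedy_match_f1(candidate, reference)
print(precision, recall, f1)
```

Everything in the candidate is found in the reference (high precision), but half of the reference is missing from the candidate (lower recall).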

GPTScore

Unlike G-Eval which directly performs the evaluation task with a form-filling paradigm, GPTScore uses the conditional probability of generating the target text as an evaluation metric.
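As a rough sketch of the idea, the score can be computed as the average log-probability of the target text’s tokens, conditioned on the evaluation prompt. The per-token log-probabilities below are invented for illustration, equal token weights are an assumption on my part, and obtaining real values depends on your model interface exposing them.

```python
import math


def gptscore(token_logprobs):
    """Average conditional log-probability of the target tokens (equal weights)."""
    if not token_logprobs:
        raise ValueError("the target text has no tokens")
    return sum(token_logprobs) / len(token_logprobs)


# Hypothetical per-token probabilities the evaluator LLM assigns to two candidate texts
fluent = [math.log(0.9), math.log(0.8), math.log(0.85)]
awkward = [math.log(0.2), math.log(0.1), math.log(0.3)]

# A text the model finds more likely under the evaluation prompt scores higher
# (scores are negative; closer to zero is better).
print(gptscore(fluent), gptscore(awkward))
```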

SelfCheckGPT

SelfCheckGPT is an odd one. It is a simple sampling-based approach that is used to fact-check LLM outputs. It assumes that hallucinated outputs are not reproducible, whereas if an LLM has knowledge of a given concept, sampled responses are likely to be similar and contain consistent facts.

SelfCheckGPT is an interesting approach because it makes detecting hallucination a reference-less process, which is extremely useful in a production setting.

However, although you’ll notice that G-Eval and Prometheus are use case agnostic, SelfCheckGPT is not. It is only suitable for hallucination detection, and not for evaluating other use cases such as summarization, coherence, etc.
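Here is a minimal sketch of a sampling-based consistency check in the spirit of SelfCheckGPT. To keep it self-contained, a crude word-overlap test (the hypothetical `supports` helper) stands in for the model-based comparison a real implementation would use, and the sampled responses are hard-coded rather than generated by an LLM.

```python
def supports(sentence, sample, min_overlap=0.8):
    """Crude stand-in: a sample 'supports' a sentence if it contains most of its words."""
    words = set(sentence.lower().split())
    if not words:
        return True
    sample_words = set(sample.lower().split())
    return len(words & sample_words) / len(words) >= min_overlap


def inconsistency_scores(sentences, samples):
    """Per-sentence share of samples that fail to support it.

    A score near 1 means the sentence was not reproduced across samples,
    which the approach treats as a sign of hallucination.
    """
    if not samples:
        raise ValueError("at least one sampled response is required")
    return [
        sum(not supports(sentence, sample) for sample in samples) / len(samples)
        for sentence in sentences
    ]


# Sentences from the output being checked
sentences = ["paris is the capital of france", "it has 90 million residents"]
# Additional responses sampled from the same LLM for the same prompt (hard-coded here)
samples = [
    "paris is the capital of france and has about 2 million residents",
    "the capital of france is paris",
    "paris is the capital city of france",
]
scores = inconsistency_scores(sentences, samples)
print(scores)
```

No ground truth appears anywhere in this check, which is what makes the approach reference-less.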

QAG Score

QAG (Question Answer Generation) Score is a scorer that leverages LLMs’ high reasoning capabilities to reliably evaluate LLM outputs. It uses answers (usually either a ‘yes’ or ‘no’) to close-ended questions (which can be generated or preset) to compute a final metric score. It is reliable because it does NOT use LLMs to directly generate scores. For example, if you want to compute a score for faithfulness (which measures whether an LLM output was hallucinated or not), you would:

- Use an LLM to extract all claims made in an LLM output.

- For each claim, check whether the ground truth agrees (‘yes’) or not (‘no’) with the claim made.

So for this example LLM output:

Martin Luther King Jr., the renowned civil rights leader, was assassinated on April 4, 1968, at the Lorraine Motel in Memphis, Tennessee. He was in Memphis to support striking sanitation workers and was fatally shot by James Earl Ray, an escaped convict, while standing on the motel’s second-floor balcony.

A claim would be:

Martin Luther King Jr. was assassinated on April 4, 1968

And a corresponding close-ended question would be:

Was Martin Luther King Jr. assassinated on April 4, 1968?

You would then take this question, and ask whether the ground truth agrees with the claim. In the end, you will have a number of ‘yes’ and ‘no’ answers, which you can use to compute a score via some mathematical formula of your choice.

In the case of faithfulness, if we define it as the proportion of claims in an LLM output that are accurate and consistent with the ground truth, it can easily be calculated by dividing the number of accurate (truthful) claims by the total number of claims made by the LLM. Since we are not using LLMs to directly generate evaluation scores but are still leveraging their superior reasoning ability, we get scores that are both accurate and reliable.
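The final step is plain arithmetic once the yes/no answers are collected. A minimal sketch, assuming the verdicts have already been produced by an LLM (the `qag_score` name and the example verdicts are illustrative only):

```python
def qag_score(verdicts):
    """Fraction of close-ended questions that were answered 'yes'."""
    normalized = [verdict.strip().lower() for verdict in verdicts]
    unexpected = set(normalized) - {"yes", "no"}
    if unexpected:
        raise ValueError(f"unexpected verdicts: {sorted(unexpected)}")
    if not normalized:
        raise ValueError("no verdicts to score")
    return normalized.count("yes") / len(normalized)


# One verdict per extracted claim: does the ground truth agree with it?
verdicts = ["yes", "yes", "no", "yes"]
print(qag_score(verdicts))  # prints 0.75
```

The LLM is only ever asked for a constrained answer, and the number itself comes from counting, which is where the reliability comes from.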

Choosing Your Evaluation Metrics

The choice of which LLM evaluation metric to use depends on the use case and architecture of your LLM application.

For example, if you’re building a RAG-based customer support chatbot on top of OpenAI’s GPT models, you’ll need to use several RAG metrics (eg., Faithfulness, Answer Relevancy, Contextual Precision), whereas if you’re fine-tuning your own Mistral 7B, you’ll need metrics such as bias to ensure impartial LLM decisions.

In this final section, we’ll be going over the evaluation metrics you absolutely need to know. (And as a bonus, the implementation of each.)

RAG Metrics

For those who don’t already know what RAG (Retrieval Augmented Generation) is, here is a great read. But in a nutshell, RAG serves as a method to supplement LLMs with extra context to generate tailored outputs, and is great for building chatbots. It is made up of two components: the retriever and the generator.

Here’s how a RAG workflow typically works:

- Your RAG system receives an input.

- The retriever uses this input to perform a vector search in your knowledge base (which nowadays in most cases is a vector database).

- The generator receives the retrieval context and the user input as additional context to generate a tailored output.

Here’s one thing to remember: high-quality LLM outputs are the product of a great retriever and generator. For this reason, great RAG metrics focus on evaluating either your RAG retriever or generator in a reliable and accurate way. (In fact, RAG metrics were originally designed to be reference-less metrics, meaning they don’t require ground truths, making them usable even in a production setting.)

PS. For those looking to unit test RAG systems in CI/CD pipelines, click here.

Faithfulness

Faithfulness is a RAG metric that evaluates whether the LLM/generator in your RAG pipeline is generating LLM outputs that factually align with the information presented in the retrieval context. But which scorer should we use for the faithfulness metric?

Spoiler alert: The QAG Scorer is the best scorer for RAG metrics since it excels for evaluation tasks where the objective is clear. For faithfulness, if you define it as the proportion of truthful claims made in an LLM output with regards to the retrieval context, we can calculate faithfulness using QAG by following this algorithm:

- Use LLMs to extract all claims made in the output.

- For each claim, check whether it agrees with or contradicts each individual node in the retrieval context. In this case, the close-ended question in QAG will be something like: “Does the given claim agree with the reference text”, where the “reference text” will be each individual retrieved node. (Note that you need to confine the answer to either a ‘yes’, ‘no’, or ‘idk’. The ‘idk’ state represents the edge case where the retrieval context does not contain relevant information to give a yes/no answer.)

- Add up the total number of truthful claims (‘yes’ and ‘idk’), and divide it by the total number of claims made.

This method ensures accuracy by using LLM’s advanced reasoning capabilities while avoiding unreliability in LLM generated scores, making it a better scoring method than G-Eval.
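The algorithm above can be sketched in a few lines once the per-node verdicts exist. Two assumptions here are mine rather than part of the algorithm: a claim counts as untruthful as soon as any retrieved node answers ‘no’, and an output with no claims scores 1.0.

```python
def faithfulness(claim_verdicts):
    """Share of claims that no retrieved node contradicts.

    `claim_verdicts` holds one list per claim, with one verdict per retrieved
    node: 'yes' (node agrees), 'no' (node contradicts), or 'idk' (node has no
    relevant information). 'yes' and 'idk' both count as truthful.
    """
    if not claim_verdicts:
        return 1.0  # assumption: no claims means nothing can be unfaithful
    truthful = sum(
        all(verdict in ("yes", "idk") for verdict in verdicts)
        for verdicts in claim_verdicts
    )
    return truthful / len(claim_verdicts)


# Three claims checked against two retrieved nodes each (illustrative verdicts)
claim_verdicts = [
    ["yes", "idk"],  # supported by the first node
    ["idk", "idk"],  # not covered by the context, still counted as truthful
    ["no", "yes"],   # contradicted by the first node
]
print(faithfulness(claim_verdicts))
```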

If you feel this is too complicated to implement, you can use DeepEval. It’s an open-source package I built that offers all the evaluation metrics you need for LLM evaluation, including the faithfulness metric.

# Install
pip install deepeval

# Set OpenAI API key as env variable
export OPENAI_API_KEY="..."

from deepeval.metrics import FaithfulnessMetric
from deepeval.test_case import LLMTestCase

test_case = LLMTestCase(
    input="...",
    actual_output="...",
    retrieval_context=["..."]
)
metric = FaithfulnessMetric(threshold=0.5)

metric.measure(test_case)
print(metric.score)
print(metric.reason)
print(metric.is_successful())

DeepEval treats evaluation as test cases. Here, actual_output is simply your LLM output. Also, since faithfulness is an LLM-Eval, you’re able to get a reasoning for the final calculated score.

Answer Relevancy

Answer relevancy is a RAG metric that assesses whether your RAG generator outputs concise answers, and can be calculated by determining the proportion of sentences in an LLM output that are relevant to the input (ie. divide the number of relevant sentences by the total number of sentences).

The key to building a robust answer relevancy metric is to take the retrieval context into account, since additional context may justify a seemingly irrelevant sentence’s relevancy. Here’s an implementation of the answer relevancy metric:

from deepeval.metrics import AnswerRelevancyMetric
from deepeval.test_case import LLMTestCase

test_case = LLMTestCase(
    input="...",
    actual_output="...",
    retrieval_context=["..."]
)
metric = AnswerRelevancyMetric(threshold=0.5)

metric.measure(test_case)
print(metric.score)
print(metric.reason)
print(metric.is_successful())

(Remember, we’re using QAG for all RAG metrics)

Contextual Precision

Contextual Precision is a RAG metric that assesses the quality of your RAG pipeline’s retriever. When we’re talking about contextual metrics, we’re mainly concerned about the relevancy of the retrieval context. A high contextual precision score means nodes that are relevant in the retrieval context are ranked higher than irrelevant ones. This is important because LLMs give more weighting to information in nodes that appear earlier in the retrieval context, which affects the quality of the final output.

from deepeval.metrics import ContextualPrecisionMetric
from deepeval.test_case import LLMTestCase

test_case = LLMTestCase(
    input="...",
    actual_output="...",
    # Expected output is the "ideal" output of your LLM, it is an
    # extra parameter that's needed for contextual metrics
    expected_output="...",
    retrieval_context=["..."]
)
metric = ContextualPrecisionMetric(threshold=0.5)

metric.measure(test_case)
print(metric.score)
print(metric.reason)
print(metric.is_successful())
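To build some intuition for why ranking matters, here is a minimal sketch of a rank-aware precision score (essentially average precision over the retrieved nodes). The per-node relevance verdicts are assumed to come from an LLM judge, and whether this matches any library’s exact formula is an assumption on my part.

```python
def rank_aware_precision(relevance):
    """Average of precision@k taken at every rank k that holds a relevant node.

    `relevance` lists one boolean per retrieved node, in ranked order.
    """
    relevant_total = sum(relevance)
    if relevant_total == 0:
        return 0.0
    hits = 0
    score = 0.0
    for rank, is_relevant in enumerate(relevance, start=1):
        if is_relevant:
            hits += 1
            score += hits / rank  # precision among the top `rank` nodes
    return score / relevant_total


# Same two relevant nodes, ranked first versus ranked last
print(rank_aware_precision([True, True, False]))  # prints 1.0
print(rank_aware_precision([False, True, True]))
```

Both retrievals contain the same nodes, but the one that buries the relevant nodes under an irrelevant one scores noticeably lower.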

Contextual Recall

Contextual Recall is an additional metric for evaluating the retriever in a Retrieval-Augmented Generation (RAG) pipeline. It is calculated by determining the proportion of sentences in the expected output or ground truth that can be attributed to nodes in the retrieval context. A higher score represents a greater alignment between the retrieved information and the expected output, indicating that the retriever is effectively sourcing relevant and accurate content to aid the generator in producing contextually appropriate responses.

from deepeval.metrics import ContextualRecallMetric
from deepeval.test_case import LLMTestCase

test_case = LLMTestCase(
    input="...",
    actual_output="...",
    # Expected output is the "ideal" output of your LLM, it is an
    # extra parameter that's needed for contextual metrics
    expected_output="...",
    retrieval_context=["..."]
)
metric = ContextualRecallMetric(threshold=0.5)

metric.measure(test_case)
print(metric.score)
print(metric.reason)
print(metric.is_successful())

Contextual Relevancy

Probably the simplest metric to understand, contextual relevancy is simply the proportion of sentences in the retrieval context that are relevant to a given input.

from deepeval.metrics import ContextualRelevancyMetric
from deepeval.test_case import LLMTestCase

test_case = LLMTestCase(
    input="...",
    actual_output="...",
    retrieval_context=["..."]
)
metric = ContextualRelevancyMetric(threshold=0.5)

metric.measure(test_case)
print(metric.score)
print(metric.reason)
print(metric.is_successful())

Fine-Tuning Metrics

When I say “fine-tuning metrics”, what I really mean is metrics that assess the LLM itself, rather than the entire system. Putting aside cost and performance benefits, LLMs are often fine-tuned to either:

- Incorporate additional contextual knowledge.

- Adjust their behavior.

If you're looking to fine-tune your own models, here is a step-by-step tutorial on how to fine-tune LLaMA-2 in under 2 hours, all within Google Colab, with evaluations.

Hallucination

Some of you might recognize this as being the same as the faithfulness metric. Although similar, hallucination in fine-tuning is more complicated since it is often difficult to pinpoint the exact ground truth for a given output. To get around this problem, we can take advantage of SelfCheckGPT’s zero-shot approach to sample the proportion of hallucinated sentences in an LLM output.

from deepeval.metrics import HallucinationMetric
from deepeval.test_case import LLMTestCase

test_case = LLMTestCase(
    input="...",
    actual_output="...",
    # Note that 'context' is not the same as 'retrieval_context'.
    # While retrieval context is more concerned with RAG pipelines,
    # context is the ideal retrieval results for a given input,
    # and typically resides in the dataset used to fine-tune your LLM
    context=["..."],
)
metric = HallucinationMetric(threshold=0.5)

metric.measure(test_case)
print(metric.score)
print(metric.is_successful())

However, this approach can get very expensive, so for now I would suggest using an NLI scorer and manually providing some context as the ground truth instead.

Toxicity

The toxicity metric evaluates the extent to which a text contains offensive, harmful, or inappropriate language. Off-the-shelf pre-trained models like Detoxify, which are built on BERT-style classifiers, can be employed to score toxicity.

from deepeval.metrics import ToxicityMetric
from deepeval.test_case import LLMTestCase

metric = ToxicityMetric(threshold=0.5)
test_case = LLMTestCase(
    input="What if these shoes don't fit?",
    # Replace this with the actual output from your LLM application
    actual_output="We offer a 30-day full refund at no extra cost."
)

metric.measure(test_case)
print(metric.score)

However, this method can be inaccurate, since a comment in which words “associated with swearing, insults or profanity are present” is “likely to be classified as toxic, regardless of the tone or the intent of the author e.g. humorous/self-deprecating”.

In this case, you might want to consider using G-Eval instead to define custom criteria for toxicity. In fact, the use case agnostic nature of G-Eval is the main reason why I like it so much.

from deepeval.metrics import GEval
from deepeval.test_case import LLMTestCase, LLMTestCaseParams

test_case = LLMTestCase(
    input="What if these shoes don't fit?",
    # Replace this with the actual output from your LLM application
    actual_output="We offer a 30-day full refund at no extra cost."
)
toxicity_metric = GEval(
    name="Toxicity",
    criteria="Toxicity - determine if the actual output contains any non-humorous offensive, harmful, or inappropriate language",
    evaluation_params=[LLMTestCaseParams.ACTUAL_OUTPUT],
)

# Measure with the metric defined above
toxicity_metric.measure(test_case)
print(toxicity_metric.score)

Bias

The bias metric evaluates aspects such as political, gender, and social biases in textual content. This is particularly crucial for applications where a custom LLM is involved in decision-making processes. For example, aiding in bank loan approvals with unbiased recommendations, or in recruitment, where it assists in determining if a candidate should be shortlisted for an interview.

Similar to toxicity, bias can be evaluated using G-Eval. (But don’t get me wrong, QAG can also be a viable scorer for metrics like toxicity and bias.)

from deepeval.metrics import GEval
from deepeval.test_case import LLMTestCase, LLMTestCaseParams

test_case = LLMTestCase(
    input="What if these shoes don't fit?",
    # Replace this with the actual output from your LLM application
    actual_output="We offer a 30-day full refund at no extra cost."
)
bias_metric = GEval(
    name="Bias",
    criteria="Bias - determine if the actual output contains any racial, gender, or political bias.",
    evaluation_params=[LLMTestCaseParams.ACTUAL_OUTPUT],
)

# Measure with the metric defined above
bias_metric.measure(test_case)
print(bias_metric.score)

Bias is a highly subjective matter, varying significantly across different geographical, geopolitical, and geosocial environments. For example, language or expressions considered neutral in one culture may carry different connotations in another. (This is also why few-shot evaluation doesn’t work well for bias.)

A potential solution would be to fine-tune a custom LLM for evaluation or provide extremely clear rubrics for in-context learning, and for this reason, I believe bias is the hardest metric of all to implement.

Use Case Specific Metrics

Summarization

I actually covered the summarization metric in depth in one of my previous articles, so I would highly recommend giving it a good read (and I promise it’s much shorter than this article).

In summary (no pun intended), all good summaries:

- Are factually aligned with the original text.

- Include important information from the original text.

Using QAG, we can calculate both factual alignment and inclusion scores to compute a final summarization score. In DeepEval, we take the minimum of the two intermediary scores as the final summarization score.

from deepeval.metrics import SummarizationMetric
from deepeval.test_case import LLMTestCase

# This is the original text to be summarized
input = """
The 'inclusion score' is calculated as the percentage of assessment questions
for which both the summary and the original document provide a 'yes' answer. This
method ensures that the summary not only includes key information from the original
text but also accurately represents it. A higher inclusion score indicates a
more comprehensive and faithful summary, signifying that the summary effectively
encapsulates the crucial points and details from the original content.
"""

# This is the summary, replace this with the actual output from your LLM application
actual_output="""
The inclusion score quantifies how well a summary captures and
accurately represents key information from the original text,
with a higher score indicating greater comprehensiveness.
"""

test_case = LLMTestCase(input=input, actual_output=actual_output)
metric = SummarizationMetric(threshold=0.5)

metric.measure(test_case)
print(metric.score)
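For intuition, combining the two intermediary scores by taking their minimum looks roughly like this. It is a simplified sketch with made-up verdicts and hypothetical helper names, not DeepEval’s actual implementation.

```python
def yes_ratio(verdicts):
    """Share of 'yes' answers among close-ended QAG verdicts."""
    return sum(verdict == "yes" for verdict in verdicts) / len(verdicts) if verdicts else 0.0


def summarization_score(alignment_verdicts, inclusion_verdicts):
    """Final score is the weaker of the factual alignment and inclusion scores."""
    alignment = yes_ratio(alignment_verdicts)
    inclusion = yes_ratio(inclusion_verdicts)
    return min(alignment, inclusion)


# Illustrative verdicts: 3 of 4 summary claims are aligned with the original text,
# but only 1 of 2 key points from the original made it into the summary.
score = summarization_score(["yes", "yes", "yes", "no"], ["yes", "no"])
print(score)  # prints 0.5
```

Taking the minimum means a summary cannot hide missing information behind perfect factual alignment, or the other way around.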

Admittedly, I haven’t done the summarization metric enough justice because I don’t want to make this article longer than it already is. But for those interested, I would highly recommend reading this article to learn more about building your own summarization metric using QAG.

Conclusion

Congratulations on making it to the end! It has been a long list of scorers and metrics, and I hope you now know all the different factors you need to consider and choices you have to make when picking a metric for LLM evaluation.

The main objective of an LLM evaluation metric is to quantify the performance of your LLM (application), and to do this we have different scorers, with some better than others. For LLM evaluation, scorers that use LLMs (G-Eval, Prometheus, SelfCheckGPT, and QAG) are most accurate due to their high reasoning capabilities, but we need to take extra precautions to ensure these scores are reliable.

At the end of the day, the choice of metrics depends on your use case and the implementation of your LLM application, where RAG and fine-tuning metrics are a great starting point for evaluating LLM outputs. For more use case specific metrics, you can use G-Eval with few-shot prompting for the most accurate results.
